How to Become Better With DeepSeek in 10 Minutes

Author: Pauline Knowles | Date: 25-03-11 07:56 | Views: 2 | Comments: 0

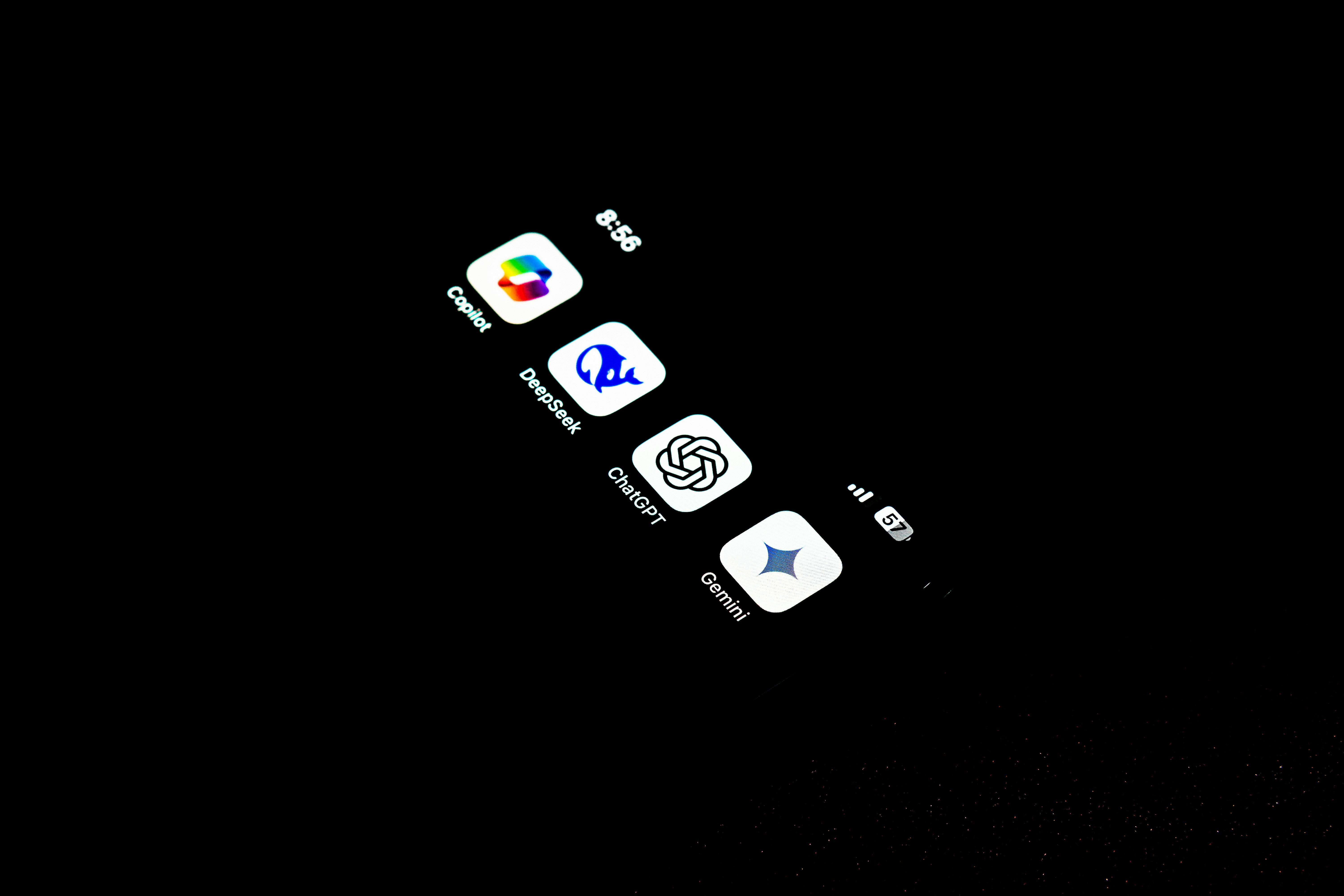

Connect with NowSecure to uncover the risks in both the mobile apps you build and third-party apps such as DeepSeek. Explore advanced tools like file analysis or DeepSeek Chat V2 to maximize productivity. Even other GPT models like gpt-3.5-turbo or gpt-4 were better than DeepSeek-R1 in chess. So while it’s been bad news for the big players, it may be good news for small AI startups, particularly since its models are open source. The explanations are not very accurate, and the reasoning is not excellent. Companies like OpenAI and Google are investing heavily in closed systems to maintain a competitive edge, but the growing quality and adoption of open-source alternatives are challenging their dominance. These companies will undoubtedly pass the cost on to their downstream buyers and consumers. It cost roughly 200 million yuan. The longest game was 20 moves, and arguably a very bad game. The average game length was 8.3 moves. The median game length was 8.0 moves. GPT-2 was a bit more consistent and played better moves. More recently, I’ve carefully assessed the ability of GPTs to play legal moves and estimated their Elo rating. The TL;DR is that gpt-3.5-turbo-instruct is the best GPT model and plays at 1750 Elo, a very interesting result (despite the generation of illegal moves in some games).

Many people ask, "Is DeepSeek better than ChatGPT?" If it’s not "worse", it is at least not better than GPT-2 in chess. On the plus side, it’s simpler and easier to get started with CPU inference. DeepSeek-V3 delivers groundbreaking improvements in inference speed compared to earlier models. Can DeepSeek-V3 help with personal productivity? To tackle the issue of communication overhead, DeepSeek-V3 employs an innovative DualPipe framework to overlap computation and communication between GPUs. This sophisticated system has 671 billion parameters, though remarkably only 37 billion are active for any given token. Users are increasingly putting sensitive data into generative AI systems, everything from confidential business information to highly personal details about themselves. The protection of sensitive data also depends on the system being configured properly and being continuously secured and monitored. A handy solution for anyone needing to work with and preview JSON data efficiently. You can use that menu to chat with the Ollama server without needing a web UI. Instead of playing chess in the chat interface, I decided to leverage the API to create several games of DeepSeek-R1 against a weak Stockfish. By weak, I mean a Stockfish with an estimated Elo rating between 1300 and 1900: not the state-of-the-art Stockfish, but one with a rating that is not too high.
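To give a rough sense of what those ratings mean, here is a minimal sketch of the standard Elo expected-score formula. The function name `expected_score` is my own, and the comparison (a 1750 Elo player against the two ends of the 1300 to 1900 Stockfish range) is only an illustration, not something measured in these games.

```python
def expected_score(rating_a: float, rating_b: float) -> float:
    """Expected score (win = 1, draw = 0.5, loss = 0) of player A against player B
    under the standard Elo model."""
    return 1.0 / (1.0 + 10 ** ((rating_b - rating_a) / 400.0))


if __name__ == "__main__":
    # Illustrative ratings only: 1750 is the figure quoted for
    # gpt-3.5-turbo-instruct; 1300 and 1900 bound the "weak Stockfish" range.
    for opponent in (1300, 1900):
        print(opponent, round(expected_score(1750, opponent), 2))
```

Under this model a 1750 player would be expected to score roughly 0.93 against a 1300 opponent and roughly 0.30 against a 1900 one, so the chosen Stockfish range brackets that level from both sides.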

The opponent was Stockfish estimated at 1490 Elo. There is some diversity in the illegal moves, i.e., not a systematic error in the model. What is even more concerning is that the model quickly made illegal moves in the game. It is not able to change its mind when illegal moves are proposed. The model is not able to understand that moves are illegal. When legal moves are played, the quality of the moves is very low. There are also self-contradictions. First, there is the fact that it exists. Mac with 18ish GB (accounting for the fact that the OS and other apps/processes need RAM, too)? The longest game was only 20.0 moves (40 plies, 20 white moves, 20 black moves). The ratio of illegal moves was much lower with GPT-2 than with DeepSeek-R1. Could you get more benefit from a larger 7B model, or does it slow down too much? In general, the model is not able to play legal moves. 4: illegal moves after the 9th move, clear advantage quickly in the game, gives a queen for free. In any case, it gives a queen for free. The level of play is very low, with a queen given for free, and a mate in 12 moves.
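The game-length figures quoted in this post (average, median, longest game in moves and plies) can be computed with a few lines of Python. This is a minimal sketch: the helper name `summarize_game_lengths` is hypothetical, and the sample list is a made-up placeholder, not the actual games played here.

```python
from statistics import mean, median


def summarize_game_lengths(lengths_in_moves):
    """Summarize game lengths given in full moves (one move = 2 plies)."""
    if not lengths_in_moves:
        raise ValueError("need at least one game")
    longest = max(lengths_in_moves)
    return {
        "mean_moves": mean(lengths_in_moves),
        "median_moves": median(lengths_in_moves),
        "longest_moves": longest,
        "longest_plies": 2 * longest,  # one white move plus one black move
    }


if __name__ == "__main__":
    # Placeholder lengths for illustration only, NOT the data from this post.
    print(summarize_game_lengths([4, 8, 12]))
```

Reporting the median alongside the mean is useful here because a single long game can pull the average up.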
