8 Methods of DeepSeek Domination

Author: Lilla Holeman | Date: 25-02-27 21:16 | Views: 2 | Comments: 0

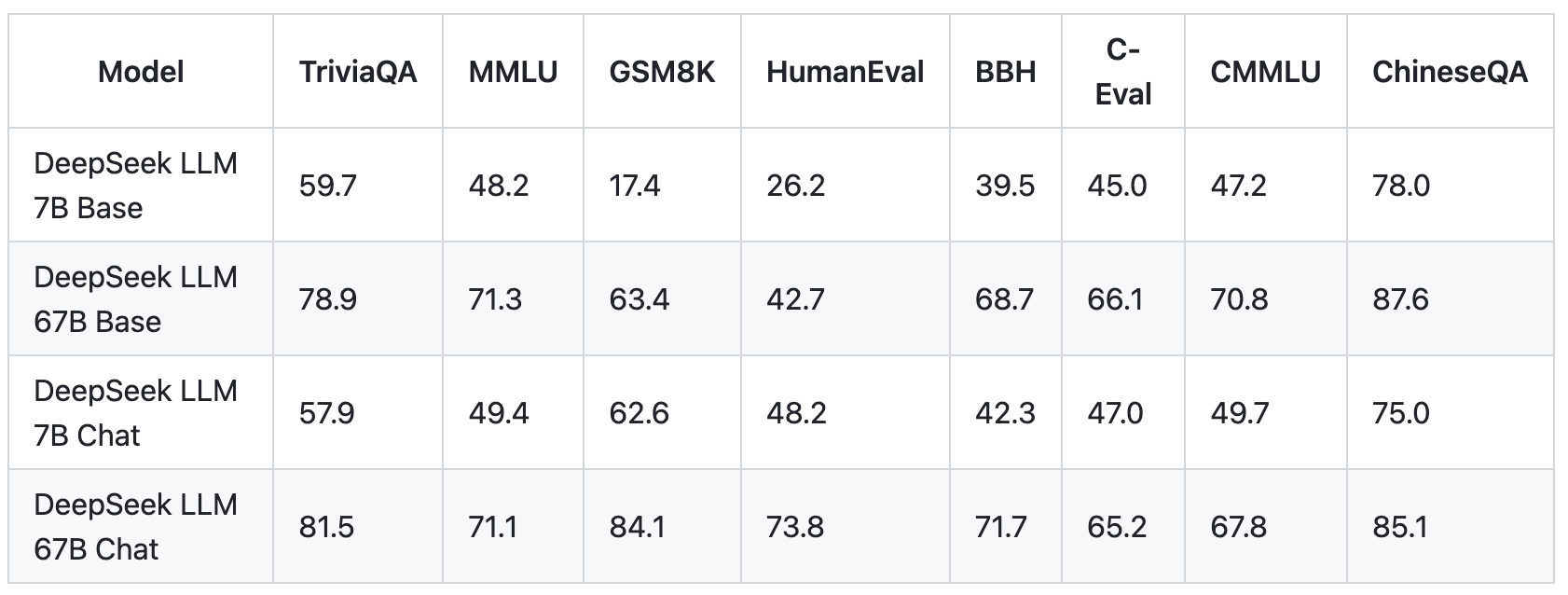

DeepSeek (深度求索), founded in 2023, is a Chinese company dedicated to making AGI a reality. Neither Feroot nor the other researchers observed data being transferred to China Mobile when testing logins in North America, but they could not rule out that data for some users was being transferred to the Chinese telecom. In 2019, High-Flyer set up an SFC-regulated subsidiary in Hong Kong named High-Flyer Capital Management (Hong Kong) Limited. Today, a project named FlashMLA was released. The Chat versions of the two Base models were released concurrently, obtained by training Base with supervised fine-tuning (SFT) followed by direct preference optimization (DPO). Like OpenAI, the hosted version of DeepSeek Chat may collect users' data and use it for training and improving its models. DeepSeek-V3 offers similar or superior capabilities compared with models like ChatGPT, at a significantly lower cost.

This ability to distill a larger model's capabilities down to a smaller model for portability, accessibility, speed, and cost will open up many possibilities for applying artificial intelligence in places where it would otherwise not have been possible. The distilled models are very different from R1, which is a large model with a completely different architecture from the distilled variants. They are therefore not directly comparable in capability, but are instead built to be smaller and more efficient for more constrained environments.
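To make the "SFT followed by DPO" step mentioned above more concrete, here is a minimal sketch of the standard DPO loss for a single preference pair. It is not DeepSeek's training code. The function name `dpo_loss`, the `beta` default, and the example log-probabilities are assumptions chosen for illustration. The inputs are the summed token log-probabilities of the preferred ("chosen") and dispreferred ("rejected") responses under the model being trained and under a frozen reference model (typically the SFT checkpoint).

```python
import math


def dpo_loss(policy_chosen_logp, policy_rejected_logp,
             ref_chosen_logp, ref_rejected_logp, beta=0.1):
    """DPO loss for one (chosen, rejected) pair of responses.

    Each argument is a summed log-probability of a full response.
    beta (an assumed default here) controls how strongly the policy
    is pushed away from the reference model.
    """
    # How much more the policy likes each response than the reference does.
    chosen_margin = policy_chosen_logp - ref_chosen_logp
    rejected_margin = policy_rejected_logp - ref_rejected_logp
    logits = beta * (chosen_margin - rejected_margin)

    # loss = -log(sigmoid(logits)), written in a numerically stable form.
    if logits >= 0:
        return math.log1p(math.exp(-logits))
    return -logits + math.log1p(math.exp(logits))


# Illustrative numbers only: the policy has shifted probability mass
# toward the chosen response relative to the reference.
example = dpo_loss(-8.0, -14.0, -10.0, -12.0)
```

When the policy and the reference agree exactly, the loss equals log 2. It falls as the policy favors the chosen response more strongly than the reference does.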

Obviously the last 3 steps are where the majority of your work will go. Small Agency of the Year" and the "Best Small Agency to Work For" in the U.S.

1. How Does DeepSeek Work? Curious about what makes DeepSeek so compelling? DeepSeek R1, the new entrant to the Large Language Model wars, has created quite a splash over the past few weeks. DeepSeek should be commended for making its contributions free and open. 1. The contributions to the state of the art and the open research help move the field forward so that everyone benefits, not just a few highly funded AI labs building the next billion-dollar model. 2. Open-sourcing the model and making it freely available is an asymmetric strategy against the prevailing closed nature of much of the model landscape among the larger players.

The interim model (DeepSeek-R1-Zero), however, suffered from poor readability and language mixing, and is only an interim reasoning model built on RL principles and self-evolution. RL mimics the process by which a baby learns to walk: through trial, error, and first principles. OpenAI's o1-series models were the first to achieve this successfully, with inference-time scaling and Chain-of-Thought reasoning. Although its language capabilities degraded during the process, its Chain-of-Thought (CoT) capabilities for solving complex problems were later used for further RL on the DeepSeek-V3-Base model, which became R1.
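The trial-and-error idea behind RL can be illustrated with a toy example that is far simpler than anything used to train a language model. The sketch below is an epsilon-greedy multi-armed bandit. The function name `run_bandit`, the fixed reward values, and the parameters are all assumptions made for illustration. The agent does not know the rewards in advance and learns which action is best only by trying actions and observing the outcome.

```python
import random


def run_bandit(rewards, steps=200, epsilon=0.1, seed=0):
    """Learn action values by trial and error (epsilon-greedy).

    rewards: the fixed reward each action yields (hidden from the agent,
             which only sees the reward of the action it just tried).
    Returns (estimates, counts) after `steps` further tries.
    """
    rng = random.Random(seed)
    n_arms = len(rewards)
    counts = [0] * n_arms
    estimates = [0.0] * n_arms

    def pull(arm):
        reward = rewards[arm]  # environment feedback
        counts[arm] += 1
        # Incremental running mean of the rewards seen for this arm.
        estimates[arm] += (reward - estimates[arm]) / counts[arm]

    # Try every action once so each estimate is based on experience.
    for arm in range(n_arms):
        pull(arm)

    for _ in range(steps):
        if rng.random() < epsilon:
            arm = rng.randrange(n_arms)  # explore: random action
        else:
            arm = max(range(n_arms), key=lambda a: estimates[a])  # exploit
        pull(arm)

    return estimates, counts


estimates, counts = run_bandit([0.2, 0.8, 0.5])
best_arm = max(range(len(estimates)), key=lambda a: estimates[a])
```

Here the agent ends up preferring the second action because experience showed it pays the most. RL on a language model follows the same loop in spirit, with generated answers as actions and a reward signal scoring them.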

3. It reminds us that this is not a one-horse race, and it incentivizes competition, which has already resulted in OpenAI o3-mini, a cost-effective reasoning model that now shows its Chain-of-Thought reasoning. R1 was the first open research project to validate the efficacy of RL applied directly to the base model without relying on SFT as a first step, which resulted in the model developing advanced reasoning capabilities purely through self-reflection and self-verification. Notably, it is the first open research to validate that the reasoning capabilities of LLMs can be incentivized purely through RL, without the need for SFT. "In the first stage, two separate experts are trained: one that learns to get up from the ground and another that learns to score against a fixed, random opponent." 3. GPQA Diamond: A subset of the larger Graduate-Level Google-Proof Q&A dataset of challenging questions that domain experts consistently answer correctly, but non-experts struggle to answer accurately, even with extensive internet access. As experts warn of potential risks, this milestone sparks debates on ethics, safety, and regulation in AI development.

This is another key contribution of this technology from DeepSeek, which I believe has even further potential for the democratization and accessibility of AI. AI advantages, Trump may seek to promote the country's AI technology. Although such models may be less usable, or nearly useless, across widely varied tasks, they may understand a single task in depth. This means that, rather than merely performing tasks, the model understands them in more detail and is thus much more efficient for the job at hand. This allows intelligence to be brought closer to the edge, enabling faster inference at the point of experience (such as on a smartphone or a Raspberry Pi), which paves the way for more use cases and possibilities for innovation. It raises many exciting possibilities and is why DeepSeek-R1 is one of the most pivotal moments in tech history. Offers detailed information on DeepSeek's various models and their development history.

You can use GGUF models from Python with the llama-cpp-python or ctransformers libraries. CPU instruction sets such as AVX, AVX2, and AVX-512, where available, can further improve performance.
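As a rough sketch of the two points above, the snippet below first checks which of the AVX instruction sets a CPU reports, then shows how a GGUF file might be loaded with llama-cpp-python. Several things are assumptions: the helper names `cpu_simd_flags` and `generate`, the model path, and the context size are hypothetical, and the CPU check only works on Linux x86 systems that expose `/proc/cpuinfo`. The `llama_cpp` import sits inside the function so the rest of the code runs even if the library is not installed.

```python
from pathlib import Path


def cpu_simd_flags(cpuinfo_text):
    """Return which of AVX, AVX2, AVX-512 appear in /proc/cpuinfo-style text."""
    flags = set()
    for line in cpuinfo_text.splitlines():
        if line.lower().startswith("flags") and ":" in line:
            flags.update(line.split(":", 1)[1].split())
    # avx512f is the foundation subset that AVX-512 capable CPUs report.
    wanted = {"avx": "AVX", "avx2": "AVX2", "avx512f": "AVX-512"}
    return [label for flag, label in wanted.items() if flag in flags]


def generate(model_path, prompt, max_tokens=128):
    """Run one completion against a local GGUF file (path is hypothetical)."""
    from llama_cpp import Llama  # requires: pip install llama-cpp-python

    llm = Llama(model_path=model_path, n_ctx=2048)
    result = llm(prompt, max_tokens=max_tokens)
    return result["choices"][0]["text"]


if __name__ == "__main__":
    cpuinfo = Path("/proc/cpuinfo")
    if cpuinfo.exists():
        print("SIMD support:", cpu_simd_flags(cpuinfo.read_text()))
    # Example call, assuming a GGUF file exists at this hypothetical path:
    # print(generate("./models/model.gguf", "Explain distillation briefly."))
```

Whether these instruction sets are actually used depends on how the underlying llama.cpp library was compiled, so the check is only a hint about what the hardware can offer.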
