DeepSeek China AI - What Do These Stats Really Mean?

Author: Kourtney Higbee | Posted: 25-02-23 18:19

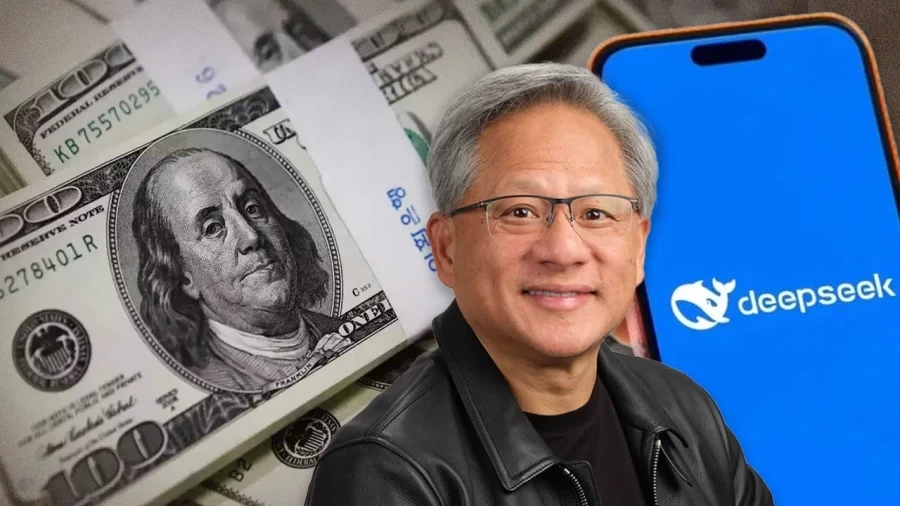

In 2022, the company donated 221 million yuan to charity as the Chinese government pushed companies to do more in the name of "common prosperity". However, numerous security concerns have surfaced about the company, prompting private and government organizations to ban the use of DeepSeek. This feat was made possible by innovative training techniques and the strategic use of downgraded NVIDIA chips, circumventing hardware restrictions imposed by the U.S. DeepSeek's model rivals those of OpenAI, Google and Meta, but does so using only about 2,000 older-generation computer chips manufactured by U.S.-based industry leader Nvidia, while costing only about $6 million worth of computing power to train. In "Star Attention: Efficient LLM Inference over Long Sequences," researchers Shantanu Acharya and Fei Jia from NVIDIA introduce Star Attention, a two-phase, block-sparse attention mechanism for efficient LLM inference on long sequences. Up to this point, the big AI companies have been willing to invest billions in infrastructure to gain marginal advantages over their rivals.

But it does fit into a broader trend in which Chinese companies are willing to use US technology development as a jumping-off point for their own research. But you should know that these are the risks, and you should certainly be careful about what you type into that little window. We agree to so much when we tick that little terms-of-service box every time we download a new app. And I think a lot of people feel so exposed in a privacy sense already that they ask: what's one more app? DeepSeek's AI assistant became the No. 1 downloaded free app on Apple's iPhone App Store on Tuesday afternoon, and its launch made the stocks of Wall Street's tech superstars tumble. OpenAI, the San Francisco-based tech company, reported 400 million weekly active users as of February, up 33% from 300 million in December, the company's chief operating officer, Brad Lightcap, told CNBC in an interview yesterday. So heavy is R1's reliance on OpenAI's system that in this CNBC coverage, the reporter asks DeepSeek's R1, "What model are you?"

"However, they are not clearly superior to GPT or Gemini models across the board in terms of performance, speed, and accuracy," Kulkarni said, referring to the various models the AI platforms use. Integration with Existing Systems: DeepSeek can integrate with various data platforms and software, ensuring smooth workflows across different organisational environments. DeepSeek showed that, given a high-performing generative AI model like OpenAI's o1, fast followers can develop open-source models that mimic its high-end performance quickly and at a fraction of the cost. First, this development (a Chinese company having built a model that rivals the best US models) does make it look as though China is closing the technology gap between itself and the US with respect to generative AI. DeepSeek claims that R1's performance on several benchmark tests rivals that of the best US-developed models, especially OpenAI's o1 reasoning model, one of the large language models behind ChatGPT. DeepSeek launched its R1 model, which rivals the best American models, on January 20th, inauguration day.

However, they are not clearly superior to GPT’s or Gemini fashions throughout the board in terms of performance, pace, and accuracy," Kulkarni stated, referring to the varied models the AI platforms use. Integration with Existing Systems: DeepSeek can seamlessly combine with varied knowledge platforms and software program, making certain clean workflows across completely different organisational environments. DeepSeek showed that, given a excessive-performing generative AI model like OpenAI’s o1, fast-followers can develop open-source models that mimic the high-end performance shortly and at a fraction of the cost. First, this development-a Chinese company having built a model that rivals one of the best US models-does make it look like China is closing the know-how gap between itself and the US with respect to generative AI. DeepSeek claims that R1’s performance on several benchmark assessments rivals that of one of the best US-developed fashions, and especially OpenAI’s o1 reasoning mannequin, one in all the massive language models behind ChatGPT. DeepSeek launched its R1 mannequin that rivals the best American models on January 20th-inauguration day.

On January 20th, a Chinese company called "DeepSeek" released a new "reasoning" model known as R1. But DeepSeek, a Chinese AI model, is rewriting the narrative. DeepSeek, by contrast, claims that it was able to achieve similar capabilities with just $5.6 million (and without the cutting-edge chips that US export controls have prevented China from buying). OpenAI claims that DeepSeek violated its terms of service by using OpenAI's o1 model to distill R1. And, more importantly, DeepSeek claims to have done it at a fraction of the cost of the US-made models. Given this background, it comes as no surprise at all that DeepSeek would violate OpenAI's terms of service to produce a competitor model with similar performance at a lower training cost. DeepSeek developed R1 using a technique called "distillation." Without going into much detail here, distillation allows developers to train a smaller (and cheaper) model by using either the output data or the probability distribution of a larger model to train or fine-tune the smaller one. No one knows exactly how much the big American AI companies (OpenAI, Google, and Anthropic) spent to develop their highest-performing models, but according to reporting, Google invested between $30 million and $191 million to train Gemini, and OpenAI invested between $41 million and $78 million to train GPT-4.
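To make the "probability distribution" flavour of distillation a little more concrete, here is a minimal sketch of the generic soft-target loss from the knowledge-distillation literature. It is an illustration only, not DeepSeek's actual training procedure, which this post does not describe. The function names, the temperature of 2.0, and the toy scores are hypothetical choices made for the example. The other flavour mentioned above, training on the larger model's output data, would instead fine-tune the student on text the teacher generated, using an ordinary next-token loss.

```python
import math


def softmax(logits, temperature=1.0):
    """Turn raw scores into probabilities, softened by a temperature."""
    scaled = [x / temperature for x in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(student_logits, teacher_logits, temperature=2.0):
    """KL(teacher || student) between temperature-softened distributions.

    Multiplying by temperature**2 is a common convention that keeps the
    loss scale comparable when the temperature changes.
    """
    p_teacher = softmax(teacher_logits, temperature)
    p_student = softmax(student_logits, temperature)
    kl = sum(p * math.log(p / q) for p, q in zip(p_teacher, p_student) if p > 0)
    return kl * temperature ** 2


# Hypothetical next-token scores over a tiny 4-token vocabulary.
teacher = [4.0, 1.0, 0.5, -2.0]
student_close = [3.5, 1.2, 0.4, -1.5]  # ranks tokens like the teacher
student_far = [-2.0, 0.5, 1.0, 4.0]    # ranks tokens in reverse

print(distillation_loss(student_close, teacher))
print(distillation_loss(student_far, teacher))
```

A lower loss means the smaller model's predictions sit closer to the larger model's. Training would adjust the student's parameters to push this number down across many examples, which is how the student can absorb the teacher's behaviour without repeating the teacher's full training run.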
