If You Wish to Be a Winner, Change Your DeepSeek AI Philosophy Now!


Author: Almeda | Posted: 25-02-07 06:17 | Views: 2 | Comments: 0


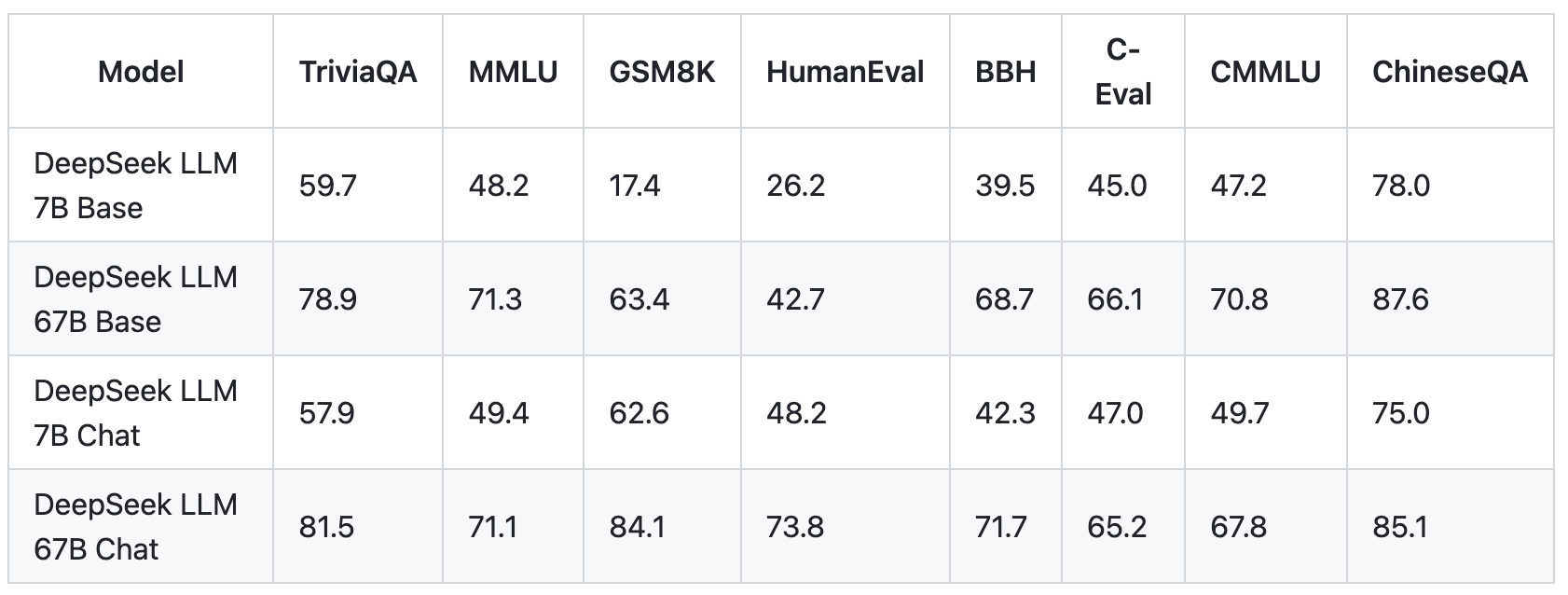

That's why it's an excellent thing whenever any new viral AI app convinces people to take another look at the technology. ChatGPT on Apple's online app store. After benchmarking DeepSeek R1 and ChatGPT, let's look at how they perform on real-world tasks. DeepSeek has quickly become a key player in the AI industry by overcoming significant challenges, such as US export controls on advanced GPUs. Computing is normally powered by graphics processing units, or GPUs. The computing arms race won't be won through alarmism or reactionary overhauls. Since China is restricted from accessing cutting-edge AI computing hardware, it would not be wise for DeepSeek to reveal its AI arsenal, which is why the expert view is that DeepSeek has computing power equal to its competitors', but undisclosed for now. But this also means it consumes significant amounts of computational power and energy resources, which is not only expensive but also unsustainable.


AI startups, academic labs, and technology giants in attempts to acquire algorithms, source code, and proprietary data that power machine learning systems. SAN FRANCISCO, USA - Developers at leading US AI firms are praising the DeepSeek AI models that have leapt into prominence while also trying to poke holes in the notion that their multi-billion dollar technology has been bested by a Chinese newcomer's low-cost alternative. These models are not just more efficient; they are also paving the way for broader AI adoption across industries. So do you think that this is the way that AI is playing out? Samples look very good in absolute terms; we've come a long way. When given a text field for the user input, bots look for familiar phrases within the question and then match the keywords with an available response.

The combined effect is that the experts become specialized: suppose two experts are both good at predicting a certain kind of input, but one is slightly better; then the weighting function would eventually learn to favor the better one. The output of the weighting function may or may not be a probability distribution, but in both cases, its entries are non-negative.
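The weighting idea above can be made concrete with a small sketch. This is not DeepSeek's or Mistral's implementation; it is a minimal, illustrative mixture of experts in which the two experts, the linear gate, and the names `softmax`, `mixture_output`, and `gate_params` are all assumptions chosen for the example. A softmax gate is used here, which is one common choice that happens to give a probability distribution; the text only requires that the weights be non-negative.

```python
import math

def softmax(scores):
    """Turn raw gate scores into non-negative weights that sum to 1."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def mixture_output(x, experts, gate_params):
    """Combine expert predictions for a scalar input x using gate weights."""
    # Hypothetical linear gate: one (slope, bias) pair per expert.
    scores = [a * x + b for a, b in gate_params]
    weights = softmax(scores)
    # Each expert can be an arbitrary function of the input.
    predictions = [f(x) for f in experts]
    y = sum(w * p for w, p in zip(weights, predictions))
    return y, weights

# Two illustrative experts (assumed for this sketch only).
experts = [lambda x: 2.0 * x, lambda x: x * x]
# Gate parameters chosen by hand; in practice they would be learned.
gate_params = [(1.0, 0.0), (-1.0, 0.0)]

y, weights = mixture_output(3.0, experts, gate_params)
```

For a positive input such as `3.0`, this hand-picked gate gives the first expert the larger weight, so the combined output sits closer to that expert's prediction. During training, the gate parameters would be adjusted so that the expert with the better predictions for a given kind of input receives more weight.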

The experts may be arbitrary functions. This encourages the weighting function to learn to select only the experts that make the right predictions for each input.

Elizabeth Economy: Right, right. Mr. Allen: Right, you talked about - you talked about EVs.

These final two charts are merely to illustrate that the current results may not be indicative of what we can expect in the future. DeepSeek-V2 was released in May 2024. It offered strong performance for a low price, and became the catalyst for China's AI model price war. Unlike Codestral, it was released under the Apache 2.0 license. It has a context length of 32k tokens. Codestral has its own license, which forbids the use of Codestral for commercial purposes. Codestral Mamba is based on the Mamba 2 architecture, which allows it to generate responses even with longer input. While earlier releases often included both the base model and the instruct version, only the instruct version of Codestral Mamba was released. So while diverse training datasets improve LLMs' capabilities, they also increase the risk of generating what Beijing views as unacceptable output. For example, the Canvas feature in ChatGPT and the Artifacts feature in Claude make organizing your generated output much easier. DeepSeek's AI assistant lacks many advanced features of ChatGPT or Claude.

